Localization is an operating system for scaling content safely across markets.

In this master guide, Mark Sheehy, Senior Marketing Localization Engineer at McAfee, explains how enterprise teams balance compliance, cost, and creativity through structured workflows, vendor governance, and hybrid human–AI models. Covering six core areas—from market adaptation to measurable ROI—it offers a practical blueprint for marketers building global content systems that work at scale.

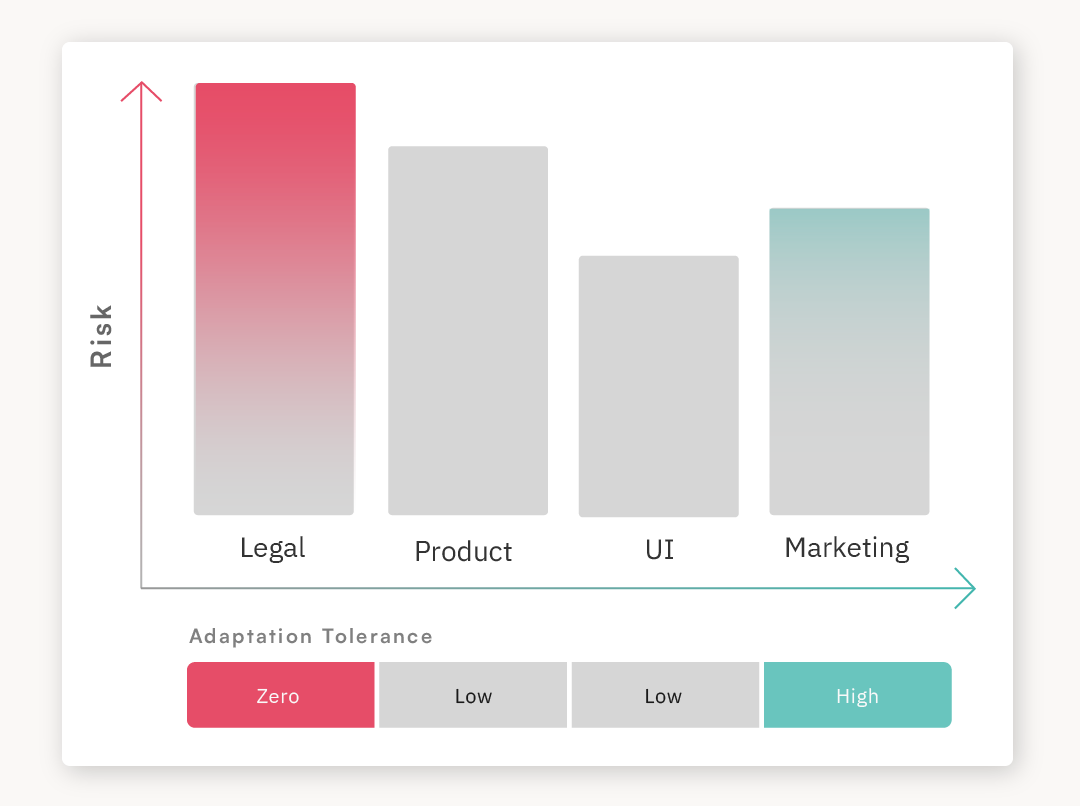

Treat localization as four parallel workstreams, not one translation step. Each has different risks, owners, and quality checks.

Legal and Compliance Content:

What it is: contracts, privacy notices, end-user license agreements (EULAs), and regulatory disclosures.

Risk: liability, fines, and brand damage.

Key enablers: legally trained linguists, red-line legal review, jurisdictional counsel sign-off, version-controlled source files, and a full audit trail (who changed what and when).

Non-negotiables: no raw machine translation. Use machine translation with human post-editing only where counsel approves.

Product Content:

What it is: Software strings, help center articles, release notes.

Risk: a broken user experience, safety issues, and a higher support load.

Key enablers: an enforced glossary and style guide, translation-memory reuse, in-context review (staging builds or screenshots), right-to-left script and length checks, and automated truncation flags.

Non-negotiables: avoid concatenation. Ship full sentences and test on real layouts before release.
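Length checks and truncation flags can start as a simple comparison of each translated string against a per-slot character budget, plus a flag for right-to-left text that needs a mirrored-layout review. This is a minimal sketch: the `UIString` record and its `max_chars` budget are hypothetical. Character counts only approximate rendered width, which is why the guide still calls for testing on real layouts.

```python
from __future__ import annotations

import unicodedata
from dataclasses import dataclass


@dataclass
class UIString:
    key: str        # hypothetical string identifier
    target: str     # translated text
    max_chars: int  # assumed character budget for the UI slot


def truncation_flags(strings: list[UIString]) -> list[str]:
    """Return keys whose translation exceeds its character budget.

    len() counts code points, not rendered pixels, so this is only a first filter.
    """
    return [s.key for s in strings if len(s.target) > s.max_chars]


def is_rtl(text: str) -> bool:
    """True if the text contains right-to-left letters (bidi class 'R', e.g. Hebrew, or 'AL', Arabic)."""
    return any(unicodedata.bidirectional(ch) in ("R", "AL") for ch in text)


strings = [
    UIString("btn.save", "Speichern", 10),
    UIString("btn.cancel", "Abbrechen und schließen", 12),
    UIString("btn.ok", "موافق", 10),
]
print(truncation_flags(strings))                     # ['btn.cancel']
print([s.key for s in strings if is_rtl(s.target)])  # ['btn.ok']
```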

Marketing Content:

What it is: Websites, ads, campaigns, videos, sales assets.

Risk: tone-deaf messaging and weak conversion.

Key enablers: a market-specific creative brief, transcreation, in-market reviewers, A/B testing of calls to action and value phrasing, and visual adaptation to local norms.

Non-negotiables: idioms rarely port. Judge copy by engagement and conversion rather than literal fidelity.

Localization Operations:

What it is: The system that keeps everything consistent at scale.

Risk: rework, cost overruns, and inconsistency.

Key enablers: clear ownership of translation memory and terminology, versioned source control, service-level agreements and defined roles (Responsible–Accountable–Consulted–Informed), language-quality assurance scoring, vendor-performance dashboards, connectors between content and translation systems (e.g., content-management system to translation-management system), and a change-log policy.

Non-negotiables: source sign-off before handoff. Maintain a shared issue tracker and conduct quarterly quality and business reviews.
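Language-quality assurance scoring is often done by weighting each reviewer-logged error by severity and normalizing per 1,000 reviewed words. The sketch below illustrates that idea only; the severity weights and the 98-point pass threshold are assumptions, not values from this guide, and teams usually calibrate their own.

```python
from __future__ import annotations

# Assumed severity weights and pass threshold; not prescribed by this guide.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 10}
PASS_THRESHOLD = 98.0


def lqa_score(errors: dict[str, int], word_count: int) -> float:
    """Score = 100 minus weighted error points per 1,000 reviewed words (floored at 0)."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    penalty = sum(SEVERITY_WEIGHTS[severity] * count for severity, count in errors.items())
    return max(0.0, 100.0 - penalty * 1000 / word_count)


score = lqa_score({"minor": 3, "major": 1}, word_count=2000)
print(score, score >= PASS_THRESHOLD)  # 96.0 False
```

Tracking these scores per vendor and per language over time is what feeds the vendor-performance dashboards and quarterly reviews described above.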

Localization often breaks down because offers, products, and messaging differ by country. If you don’t classify these differences and build them into your workflow, they slip through the cracks. Automation helps, but constant changes still need human review.

Some markets, such as Japan, require formal linguistic structures and honorifics, for example adding “-san” or “-sama” after a person’s name.

Claims like “24/7 phone support” may be true in some regions but not in others. Always confirm availability by market before translating.

Errors such as outdated phone numbers or unavailable services undermine trust and can cause compliance issues.

Localize both currency and numeric formatting: for example, 1.000,50 € in Germany vs. €1,000.50 in Ireland.
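The difference is in the separators and the position of the currency symbol, not in the amount. This minimal sketch hard-codes two markets in a hypothetical `CONVENTIONS` table. Production systems usually take these rules from Unicode CLDR data (for example, through a library such as Babel) rather than maintaining them by hand.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical convention table; real systems usually pull these rules from CLDR data.
CONVENTIONS = {
    "de-DE": {"group": ".", "decimal": ",", "symbol": "€", "symbol_first": False},
    "en-IE": {"group": ",", "decimal": ".", "symbol": "€", "symbol_first": True},
}


def format_money(amount: Decimal, market: str) -> str:
    """Format an amount with the market's separators and currency-symbol position."""
    conv = CONVENTIONS[market]
    rounded = amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    whole, frac = f"{rounded:,.2f}".split(".")  # e.g. '1,000' and '50'
    number = whole.replace(",", conv["group"]) + conv["decimal"] + frac
    if conv["symbol_first"]:
        return conv["symbol"] + number
    return number + "\u00a0" + conv["symbol"]  # no-break space keeps amount and symbol together


print(format_money(Decimal("1000.50"), "de-DE"))  # 1.000,50 €
print(format_money(Decimal("1000.50"), "en-IE"))  # €1,000.50
```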

Adapt for local regulations such as the European Union’s General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and China’s Personal Information Protection Law (PIPL).

“10/11/2025” means 10 November in most of Europe but October 11 in the United States.
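One way to avoid this ambiguity is to store dates in an unambiguous form such as ISO 8601 (YYYY-MM-DD) and apply a market's pattern only when parsing or displaying. The per-market patterns below are a hypothetical, hand-written table for illustration.

```python
from datetime import date, datetime

# Hypothetical per-market date patterns (strptime/strftime syntax).
DATE_FORMATS = {
    "en-US": "%m/%d/%Y",  # month first
    "en-GB": "%d/%m/%Y",  # day first
    "de-DE": "%d.%m.%Y",
}


def parse_market_date(text: str, market: str) -> date:
    """Interpret a displayed date using the market's convention."""
    return datetime.strptime(text, DATE_FORMATS[market]).date()


def format_market_date(value: date, market: str) -> str:
    """Render an internally stored date for a given market."""
    return value.strftime(DATE_FORMATS[market])


print(parse_market_date("10/11/2025", "en-US"))          # 2025-10-11
print(parse_market_date("10/11/2025", "en-GB"))          # 2025-11-10
print(format_market_date(date(2025, 11, 10), "de-DE"))   # 10.11.2025
```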

Use metric or imperial units as appropriate.

Include locally preferred payment options such as Alipay or WeChat Pay in China, iDEAL in the Netherlands, and PayPal in North America.

A reference to the “holiday season” may resonate in the United States, while “Christmas” may fit better in much of Europe; other markets observe entirely different holidays.

Always adapt to local calendars and observances.

Localize spelling and phrasing: for example, Spanish in Spain vs. Latin America, or English in the United Kingdom vs. the United States (“colour” vs. “color”).

Translation Briefs:

Include notes that flag market-specific differences for translators.

In-Market Reviewers:

Native colleagues review content inside the translation system to validate tone, terminology, and accuracy.

Source Reviews:

Push back when English content makes assumptions that don’t fit globally.

Keep the Source Generic:

Phrase copy conservatively to avoid over-promising (“Support available” instead of “24/7 support”).
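Confirming claims by market and defaulting to generic copy can also be encoded where copy is assembled. In this minimal sketch, the market codes and the `SUPPORT_AVAILABILITY` data are hypothetical. A market with no confirmed data falls back to the conservative wording.

```python
# Hypothetical availability data, confirmed per market before translation starts.
SUPPORT_AVAILABILITY = {"US": "24/7", "DE": "business_hours"}

SPECIFIC_CLAIM = "24/7 phone support"
GENERIC_CLAIM = "Support available"


def support_claim(market: str) -> str:
    """Use the specific claim only where it is confirmed; otherwise fall back to generic copy."""
    if SUPPORT_AVAILABILITY.get(market) == "24/7":
        return SPECIFIC_CLAIM
    return GENERIC_CLAIM


print(support_claim("US"))  # 24/7 phone support
print(support_claim("JP"))  # Support available (no confirmed data, so stay conservative)
```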

At enterprise scale, localization can cover more than forty languages across legal, product, and marketing content. Planning early for scope, cost, and vendor management prevents overruns and keeps global releases on schedule.

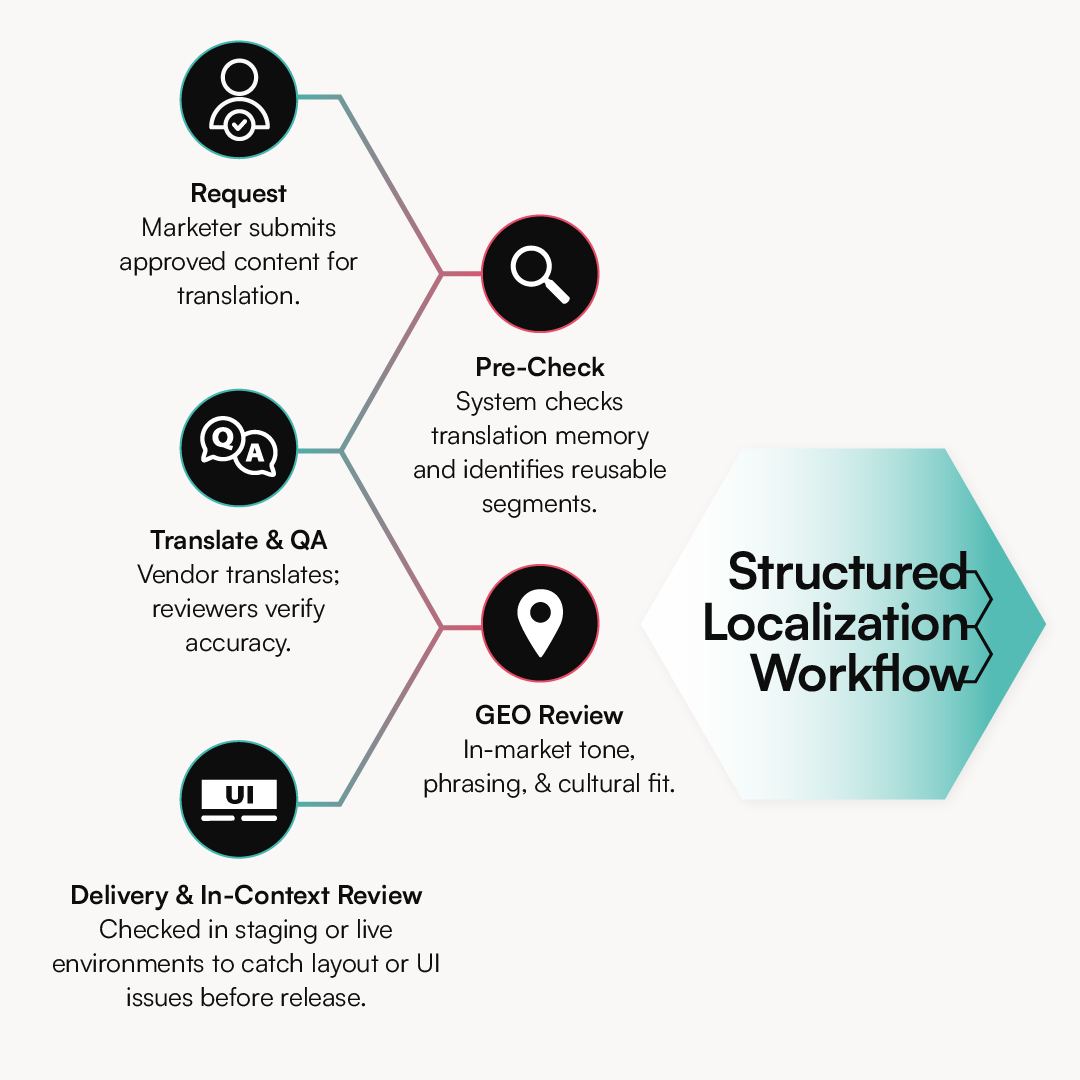

Each content type requires different review steps and risk tolerance.

Planning these tiers in advance sets realistic budgets and delivery expectations.

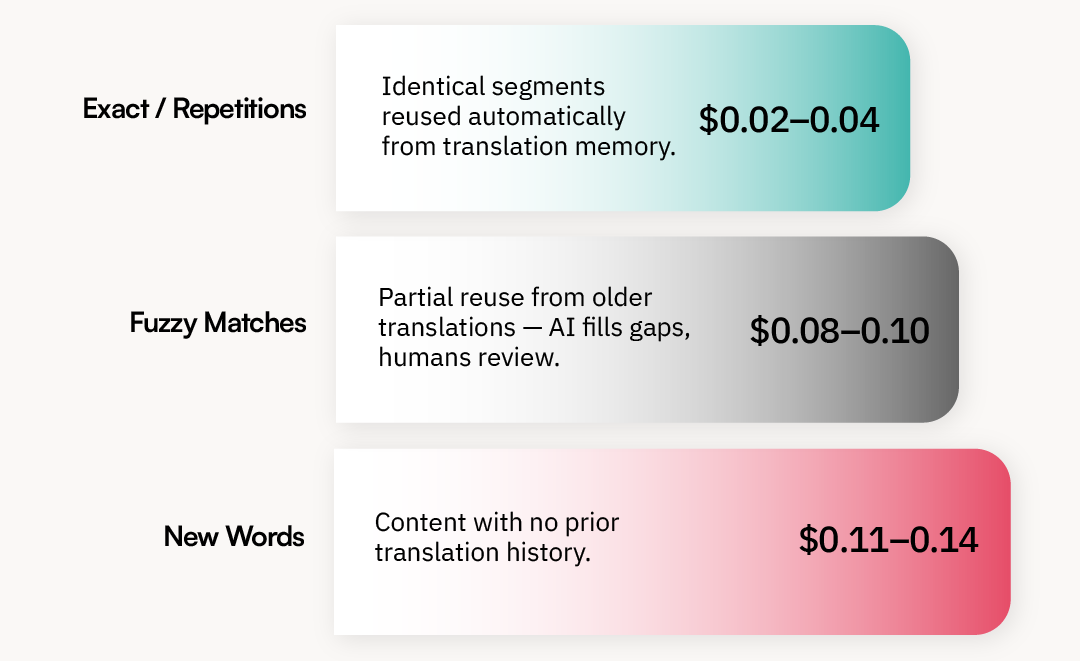

Translation memories store previous work so recurring phrases can be reused automatically.
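A toy translation memory makes the idea concrete: exact matches are reused as-is, and close ("fuzzy") matches are offered to a linguist for editing. Commercial translation-management systems use their own matching and scoring. Here Python's `difflib.SequenceMatcher` is a stand-in, and the 0.75 threshold is an assumption.

```python
from __future__ import annotations

from difflib import SequenceMatcher


class TranslationMemory:
    """Toy translation memory: exact matches are reused, close matches are suggested for editing."""

    def __init__(self) -> None:
        self._entries: dict[str, str] = {}

    def add(self, source: str, target: str) -> None:
        self._entries[source] = target

    def lookup(self, source: str, threshold: float = 0.75) -> tuple[str, float] | None:
        """Return (stored translation, similarity 0..1) for the best match, or None."""
        if source in self._entries:
            return self._entries[source], 1.0
        best: tuple[str, float] | None = None
        for stored_source, target in self._entries.items():
            score = SequenceMatcher(None, source, stored_source).ratio()
            if score >= threshold and (best is None or score > best[1]):
                best = (target, score)
        return best


tm = TranslationMemory()
tm.add("Your account has expired.", "Ihr Konto ist abgelaufen.")
print(tm.lookup("Your account has expired."))        # ('Ihr Konto ist abgelaufen.', 1.0)
print(tm.lookup("Your account has expired today."))  # fuzzy match; needs linguist review
```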

Legal and technical material costs more to translate than general marketing or interface text.

Standard delivery (two to five business days) uses the base rate.

Expedited or weekend work can cost 1.5× to 2.5× the base rate.

Takeaway: cost scales with content volume, complexity, and urgency.
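Those three factors can be combined into a rough estimate. Only the 1.5× to 2.5× urgency range comes from this guide. The per-word base rates and the 30% billing factor for translation-memory matches are hypothetical placeholders for a vendor's actual rate card. `Decimal` avoids floating-point rounding surprises in currency math.

```python
from decimal import Decimal

# Hypothetical per-word base rates; legal and technical priced higher, as the guide notes.
BASE_RATE = {
    "ui": Decimal("0.12"),
    "marketing": Decimal("0.12"),
    "technical": Decimal("0.18"),
    "legal": Decimal("0.22"),
}
TM_MATCH_FACTOR = Decimal("0.30")  # assumed: words reused from translation memory billed at 30%


def estimate_cost(new_words: int, tm_words: int, content_type: str,
                  rush_multiplier: Decimal = Decimal("1.0")) -> Decimal:
    """Estimate cost from volume (new + reused words), complexity (rate), and urgency (multiplier)."""
    if not Decimal("1.0") <= rush_multiplier <= Decimal("2.5"):
        raise ValueError("rush_multiplier must be between 1.0 (standard) and 2.5")
    billable_words = new_words + tm_words * TM_MATCH_FACTOR
    return (billable_words * BASE_RATE[content_type] * rush_multiplier).quantize(Decimal("0.01"))


print(estimate_cost(1000, 500, "legal"))                  # 253.00
print(estimate_cost(1000, 500, "legal", Decimal("2.0")))  # 506.00
```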

Single-language vendor: specializes in one language or region.

Multi-language vendor: manages many languages through one central hub.

In-house linguists: full-time linguists employed directly by the organization.

Use a cost-efficient multi-language vendor for high-volume work, a premium specialist vendor for quality spot checks and legal content, and in-house linguists for priority markets.

Combine this setup with defined service-level agreements (SLAs) to keep expectations clear:

Shortcuts in localization save time once but cost far more later. Every missed review, skipped source check, or untrained linguist creates localization debt—errors that multiply across markets and reappear in every future release.

Mistake: Updating product names, claims, or terminology after localization work has begun.

Impact: Expensive rework and inconsistent messaging across regions.

Fix: Lock the source. Review and approve all English content before translation begins.

Result: Prevents retranslation loops and keeps all markets aligned to the same approved copy.
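Locking the source can be enforced mechanically by fingerprinting the approved copy at sign-off and refusing handoff if it has changed since. Version control or a content-management connector may already provide this. The helper below is a hypothetical sketch of the check itself.

```python
from __future__ import annotations

import hashlib


def fingerprint(segments: list[str]) -> str:
    """SHA-256 over the approved source segments, in order."""
    digest = hashlib.sha256()
    for segment in segments:
        digest.update(segment.encode("utf-8"))
        digest.update(b"\x00")  # separator so ["ab", "c"] and ["a", "bc"] differ
    return digest.hexdigest()


class SourceLock:
    """Records the signed-off source and blocks handoff if it changes afterwards."""

    def __init__(self, approved_segments: list[str]) -> None:
        self.approved = fingerprint(approved_segments)

    def check_handoff(self, segments: list[str]) -> None:
        if fingerprint(segments) != self.approved:
            raise RuntimeError("Source changed after sign-off; re-approve before handoff.")


lock = SourceLock(["Save 20% today", "Buy now"])
lock.check_handoff(["Save 20% today", "Buy now"])  # passes silently
# lock.check_handoff(["Save 25% today", "Buy now"]) would raise RuntimeError
```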

Mistake: Assuming literal translation will work everywhere.

Impact: Tone-deaf or offensive messaging that damages trust.

Fix: Budget for transcreation and in-market review to ensure tone and phrasing feel natural.

Result: Keeps brand voice authentic and culturally relevant.

Mistake: New linguists repeat old errors—especially in tone, concatenation, and glossary use.

Impact: Rising error rates and inconsistent quality.

Fix: Create short onboarding modules and maintain shared documentation of common issues.

Embed style guides directly in translator environments and track recurring problems.

Result: Builds translator familiarity and reduces error rates over time.
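An enforced glossary and a tally of recurring problems can start as a simple check run on every segment. This is a minimal sketch: the English-to-German glossary entries are hypothetical, and plain substring matching ignores inflection, which real QA tools handle more carefully.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical English-to-German glossary entries.
GLOSSARY = {"dashboard": "Dashboard", "subscription": "Abonnement"}


def glossary_violations(source: str, target: str, glossary: dict[str, str]) -> list[str]:
    """Return source terms whose approved translation is missing from the target (case-insensitive)."""
    src, tgt = source.lower(), target.lower()
    return [term for term, approved in glossary.items()
            if term in src and approved.lower() not in tgt]


# Tally violations across segments to surface recurring problems for onboarding material.
issue_log: Counter[str] = Counter()
segments = [
    ("Renew your subscription", "Verlängern Sie Ihr Abo"),
    ("Open the dashboard", "Öffnen Sie das Dashboard"),
    ("Cancel your subscription", "Kündigen Sie Ihr Abo"),
]
for source, target in segments:
    issue_log.update(glossary_violations(source, target, GLOSSARY))

print(issue_log.most_common(1))  # [('subscription', 2)]
```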

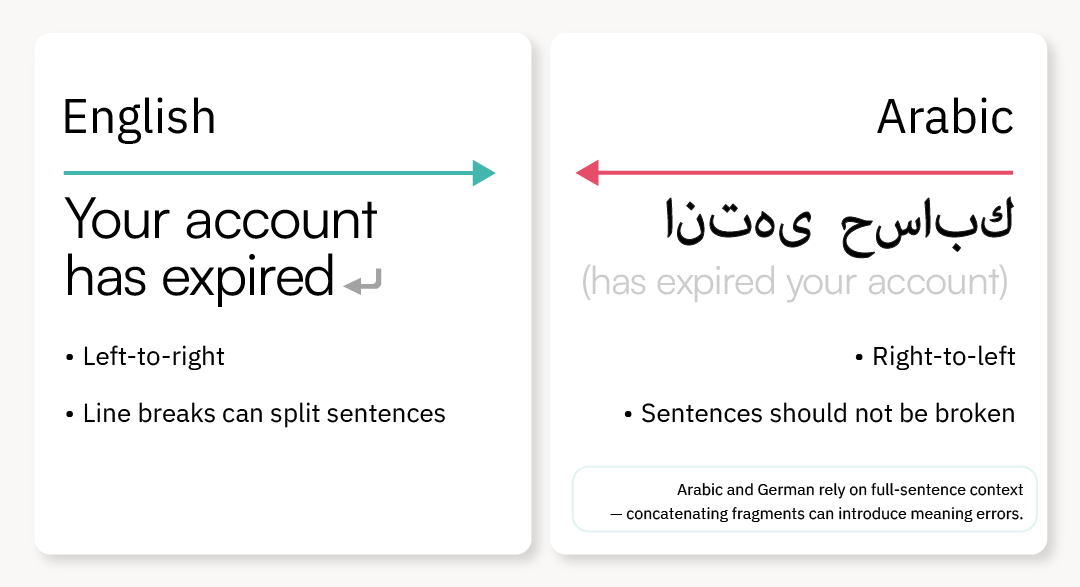

Mistake: Breaking sentences into fragments that rely on order to make sense.

Impact: Languages with different grammar structures can’t reassemble them correctly.

Broken example (two fragments joined in code):

“Your account has” + “expired”

Correct example (one complete string):

“Your account has expired.”

Fix: Write complete sentences and design interface strings accordingly.

Result: Prevents word-order and grammar errors and preserves natural phrasing.
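The difference shows up directly in code. Translated fragment by fragment, German produces “Ihr Konto hat abgelaufen”, but the verb *ablaufen* takes *sein*, so the correct sentence is “Ihr Konto ist abgelaufen.” A translator who sees only the first fragment cannot know that. In the sketch below, the message catalog and the `{product}` placeholder are hypothetical, and Python's `str.format` stands in for a message-formatting system such as ICU MessageFormat.

```python
# Anti-pattern: fragments translated separately and joined in code,
# so English word order and grammar are baked in.
def broken_message(fragments: dict) -> str:
    return fragments["account_has"] + " " + fragments["expired"]


# Preferred: one complete sentence per message, with a named placeholder
# that translators can move to wherever their grammar needs it.
MESSAGES = {
    "en": "Your {product} account has expired.",
    "de": "Ihr {product}-Konto ist abgelaufen.",
    "ja": "{product}アカウントの有効期限が切れました。",
}


def account_expired(locale: str, product: str) -> str:
    return MESSAGES[locale].format(product=product)


print(broken_message({"account_has": "Ihr Konto hat", "expired": "abgelaufen"}))  # Ihr Konto hat abgelaufen (wrong)
print(account_expired("de", "Acme"))  # Ihr Acme-Konto ist abgelaufen.
```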

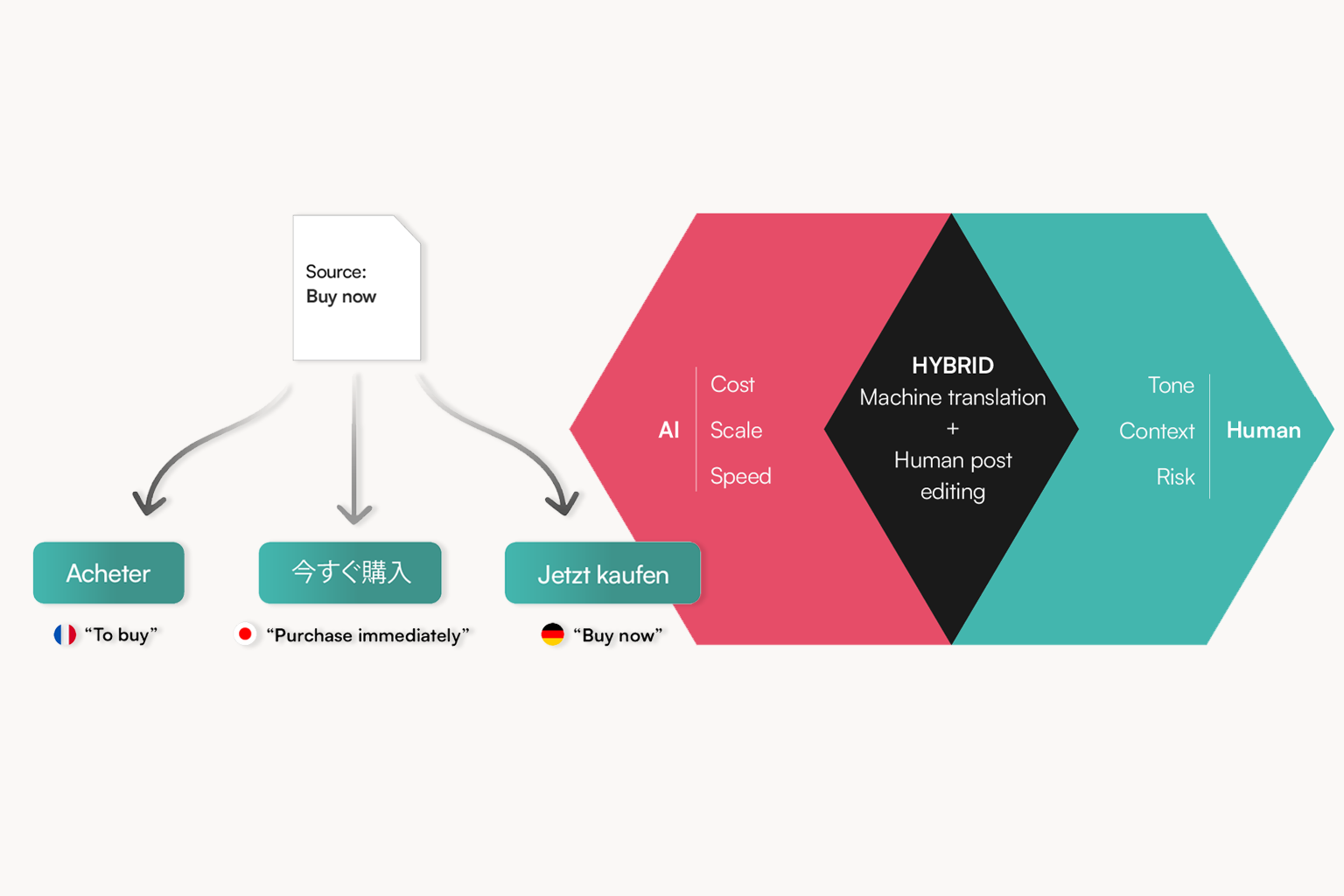

Artificial intelligence has become a core part of modern localization, but it’s not a replacement for human judgment. AI accelerates speed, consistency, and repetitive work, while people preserve accuracy, nuance, and brand trust. Finding the right hybrid balance determines whether automation saves money or creates rework.

Most enterprise workflows now include built-in machine translation engines such as DeepL or custom large language models.

These handle first-pass translations for low-risk content like FAQs or product updates.

Benefit: faster turnaround and lower cost.

Limitation: always requires post-editing by a linguist before publishing.

AI-driven tools scan web pages, screenshots, and application interfaces for untranslated text, broken layouts, or punctuation issues.

Benefit: catches layout and text errors before launch.

Limitation: visual or cultural nuance still needs a human eye.
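The AI-driven tools described above work on rendered pages and screenshots. A simpler rule-based pass can catch some of the same problems at the string level before anything is rendered. This sketch is not how those tools work internally; the function name and the allowlist of brand names left untranslated on purpose are hypothetical.

```python
import re

PLACEHOLDER = re.compile(r"\{(\w+)\}")


def qa_issues(key: str, source: str, target: str, allowlist: frozenset = frozenset()) -> list:
    """Flag empty or likely untranslated targets and placeholders that don't match the source."""
    issues = []
    if not target.strip():
        issues.append(f"{key}: empty translation")
    elif target == source and source not in allowlist:
        issues.append(f"{key}: possibly untranslated")
    if sorted(PLACEHOLDER.findall(source)) != sorted(PLACEHOLDER.findall(target)):
        issues.append(f"{key}: placeholder mismatch")
    return issues


print(qa_issues("btn.save", "Save", "Save"))                       # ['btn.save: possibly untranslated']
print(qa_issues("greet", "Hello {name}", "Hallo {nom}"))           # ['greet: placeholder mismatch']
print(qa_issues("brand", "McAfee", "McAfee", frozenset({"McAfee"})))  # []
```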

Previously, designers rebuilt every banner or graphic manually, especially graphics with embedded text that had to be re-created for each language. Today, tools such as Microsoft Copilot and Adobe Firefly can automatically generate market-specific image variants with translated on-image text and improved visual fidelity.

Benefit: speeds up delivery of on-brand visuals across languages.

Limitation: design composition and emotional tone still require human validation.

AI translation powers live chat and support bots where immediacy matters more than perfect nuance.

Humans step in for complex or sensitive queries.

Benefit: near-instant multilingual response without extra headcount.

Limitation: weaker at handling escalations and maintaining brand tone.

The best programs use automation to scale repetitive tasks while reserving human review for high-impact or high-risk content.

Example hybrid setup

This approach delivers speed, savings, and quality without adding compliance risk.
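Routing rules consistent with the guidance in this guide can be sketched as a small function. Legal content gets no raw machine translation and uses post-edited MT only with counsel approval. Low-risk FAQs and product updates get MT plus post-editing, marketing gets transcreation, and live support gets real-time MT with human escalation. The content-type labels are hypothetical, and sending unclassified content to the most conservative path is an assumption, not a rule stated here.

```python
from enum import Enum


class Workflow(Enum):
    HUMAN_ONLY = "human translation + legal review"
    MT_POST_EDIT = "machine translation + human post-editing"
    TRANSCREATION = "transcreation + in-market review"
    MT_LIVE = "real-time machine translation, human escalation"


def route(content_type: str, counsel_approved_mt: bool = False) -> Workflow:
    """Pick a workflow by risk tier; unknown types fall back to the most conservative path."""
    if content_type == "legal":
        return Workflow.MT_POST_EDIT if counsel_approved_mt else Workflow.HUMAN_ONLY
    if content_type == "marketing":
        return Workflow.TRANSCREATION
    if content_type in {"faq", "product_update"}:
        return Workflow.MT_POST_EDIT
    if content_type == "support_chat":
        return Workflow.MT_LIVE
    return Workflow.HUMAN_ONLY


print(route("faq").value)    # machine translation + human post-editing
print(route("legal").value)  # human translation + legal review
```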

Localization only shows its real value when it connects directly to business outcomes. Speed and volume matter, but the strongest programs measure performance, quality, and return on investment once content is live.

Localized experiences almost always outperform English-only versions in non-English markets.

Clear onboarding, help content, and user interfaces in the local language drive stronger engagement and lower churn.

SaaS and subscription companies frequently report 15–25 percent higher renewal rates when support and onboarding are localized.

Key takeaway: localization doesn’t just acquire users — it helps keep them.

Customer-experience teams track the same effect through satisfaction scores.

Markets with mature localization programs consistently post higher Customer Satisfaction (CSAT) and Net Promoter Scores (NPS) because customers feel understood and supported.

Result: improved brand reputation and advocacy across regions.

Strong teams measure their own process performance as carefully as audience response:

Combine external and internal data to demonstrate value:

When these indicators move together, leadership can see that localization is not a cost center but a growth enabler.
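The guide does not prescribe a formula. A common starting point is standard return on investment: incremental revenue minus cost, divided by cost. The difficult part is estimating the revenue attributable to localization (for example, from A/B tests or comparisons with English-only experiences), so this sketch takes that figure as an input. The numbers in the example are hypothetical.

```python
def localization_roi(incremental_revenue: float, localization_cost: float) -> float:
    """Return ROI as a fraction: (incremental revenue - cost) / cost."""
    if localization_cost <= 0:
        raise ValueError("localization_cost must be positive")
    return (incremental_revenue - localization_cost) / localization_cost


# Hypothetical figures for one market over one year.
roi = localization_roi(incremental_revenue=150_000, localization_cost=50_000)
print(f"{roi:.0%}")  # 200%
```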